o3: a sense of intelligence?

o3 has been announced, scoring 25% on an AGI benchmark. Everyone is shouting that the revolution has finally arrived and life is about to get better.

There are nuances.

First, what is the point of AGI benchmarks if the model was (most likely) trained on data from similar benchmarks specifically to perform better on these very tests?

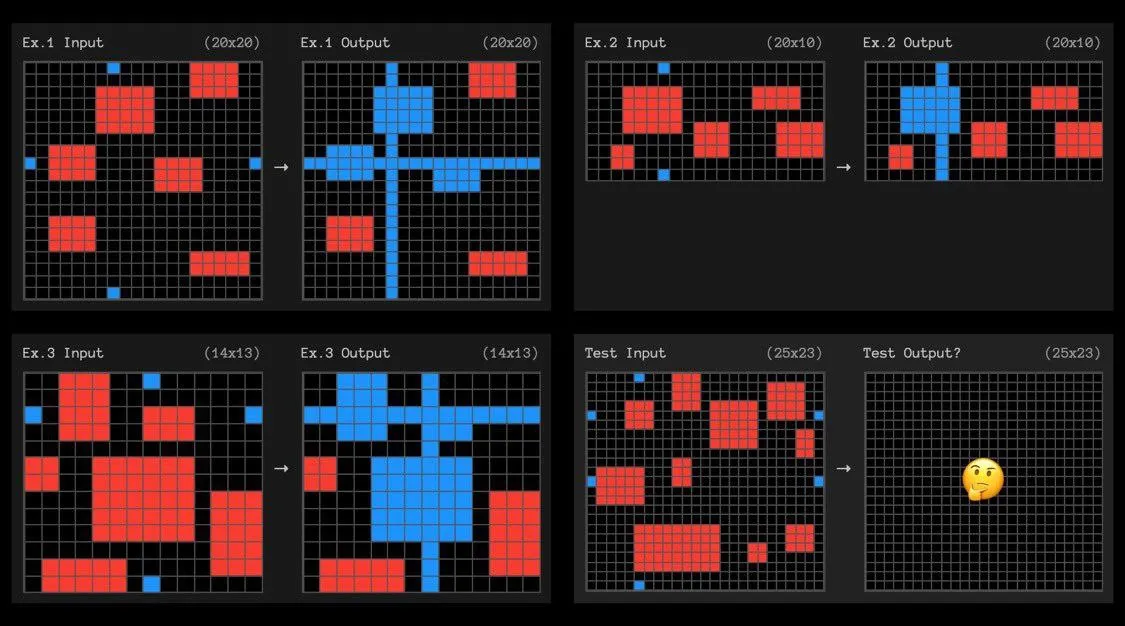

Second, the whole point of the term AGI is in the word "general": artificial general intelligence. It should not depend on specific tasks; it should be able to do everything, like a human. If a supposed AGI cannot solve a basic connect-the-dots task that a five-year-old can handle, what kind of AGI are we talking about?¹

Third, AGI should have common sense in addition to intelligence. If a model cannot understand an ordinary everyday situation that any person understands (this is my personal AGI benchmark), it is a poor model. If everyone claims this is AGI and that it is supposed to be very smart, why can't any of the models explain the meaning of this picture? I last ran this test on o1 and all weaker models, and I doubt o3 will do any better. For the same reason, a lack of common sense, the model is not capable of creative ideas.

Reading this, one might think I have something against this technology or am unhappy about progress, but that is not the case. I very much want all of this to evolve and become more accessible. These technologies open up a truly wide range of opportunities for automating many different kinds of tasks, but they are heavily overhyped in the media, which keeps predicting the imminent "extinction" of ordinary professions. There is too much digital noise, and it is worth filtering some of it out to preserve your mental health.

It's still too early.

¹ We won't speculate about whether there are corner cases here. There are clear example tasks, and if the model cannot solve a task from the examples given before it, that is a poor showing.